Voura

Projective voice visualizer

Jun 2022 ~ Jun 2022

📝 About the Project

Design project from HACKAIST, the design hackathon of KAIST & Imperial College London

Topic: Synaesthesia

I aimed to build a projective voice visualizer that can serve as a personal identifier, inspired by the “personality to color” translation found in synaesthesia.

p5.js, p5.sound

🎥 Video

This video presents the inspiration for the project and a possible application in a real-world context.

Demo video of the implemented VOURA.

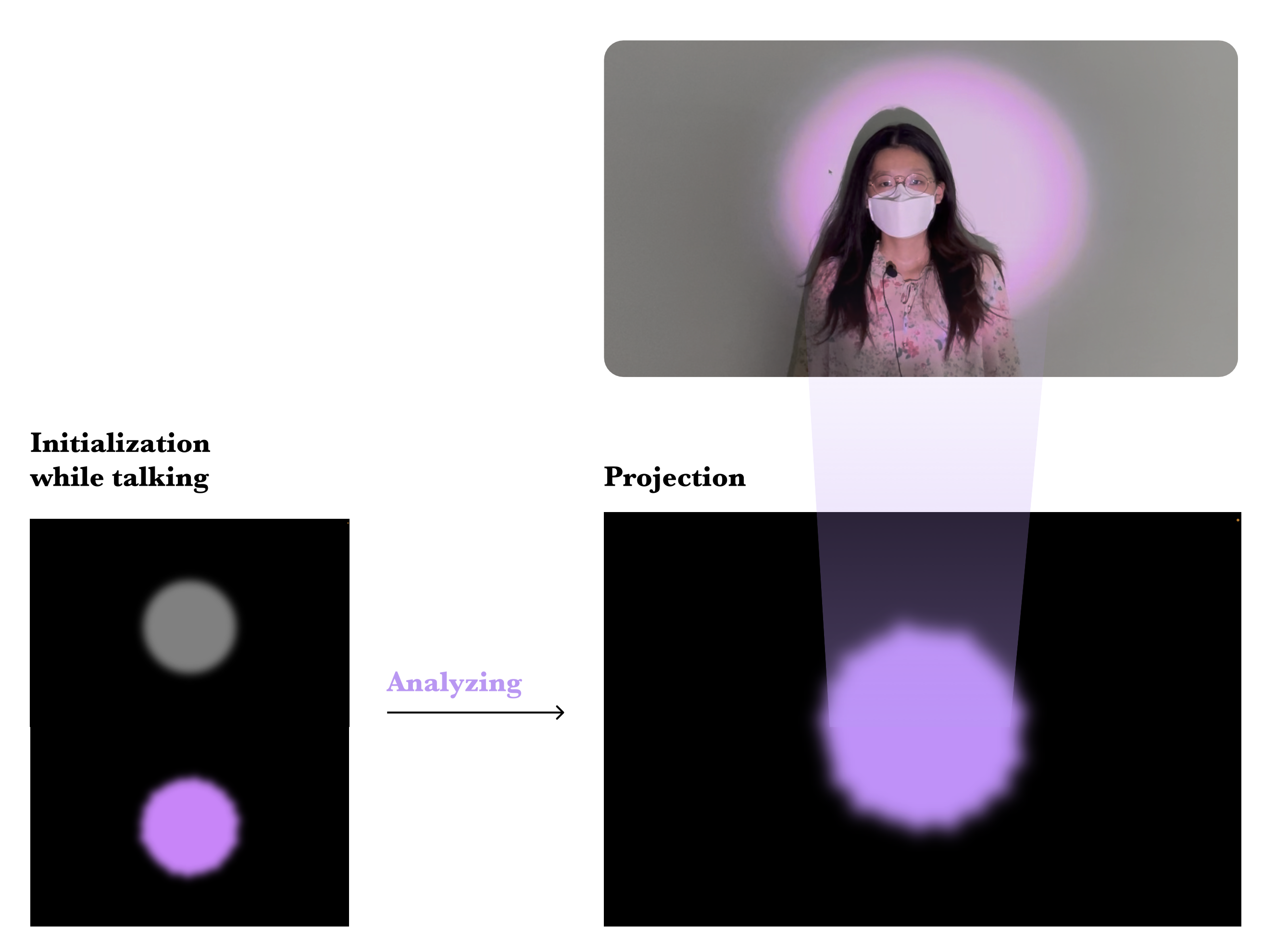

When the user talks, the projected VOURA changes its color. After an initialization period, it settles on a fixed color and size.

💬 How it works

After high-pass filtering the voice, I analyzed it with an FFT. Several values from the result are used to convert the voice into an aura.

| Voice | Aura | Conversion |
|---|---|---|
| Tone | Hue of color | higher centroid → colder color |
| Pitch | Saturation of color | lower maxFreq → more saturated color |
| Intonation | Range of size | larger range of maxFreq → larger range of size |
| Volume | Avg. size | larger average of maxFreq → larger size |

- centroid = the spectral centroid, i.e. the center of mass of the FFT result

- maxFreq = the frequency that has the maximum amplitude in the FFT result
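The conversion in the table can be sketched in plain JavaScript as below. This is a minimal illustration, not the code used in VOURA: the function names, the frequency ranges, and the output scales are all assumptions chosen only to show the direction of each mapping. Each frame is one FFT result given as an array of bin amplitudes, and `binHz` is the frequency width of one bin.

```javascript
// Sketch only: helper names, frequency ranges, and output scales are assumptions.

// centroid: amplitude-weighted mean frequency ("center of mass") of one FFT frame.
function spectralCentroid(spectrum, binHz) {
  let weighted = 0;
  let total = 0;
  for (let i = 0; i < spectrum.length; i++) {
    weighted += i * binHz * spectrum[i];
    total += spectrum[i];
  }
  return total === 0 ? 0 : weighted / total;
}

// maxFreq: frequency of the loudest bin in one FFT frame.
function maxFreq(spectrum, binHz) {
  let peak = 0;
  for (let i = 1; i < spectrum.length; i++) {
    if (spectrum[i] > spectrum[peak]) peak = i;
  }
  return peak * binHz;
}

// Linearly rescale v from [inLo, inHi] to [outLo, outHi], clamping at both ends.
function mapClamped(v, inLo, inHi, outLo, outHi) {
  const t = Math.min(1, Math.max(0, (v - inLo) / (inHi - inLo)));
  return outLo + t * (outHi - outLo);
}

// Turn the frames collected during initialization into fixed aura parameters.
// `frames` must contain at least one frame.
function voiceToAura(frames, binHz) {
  const mean = (xs) => xs.reduce((a, b) => a + b, 0) / xs.length;
  const centroids = frames.map((f) => spectralCentroid(f, binHz));
  const peaks = frames.map((f) => maxFreq(f, binHz));
  const avgPeak = mean(peaks);
  const peakRange = Math.max(...peaks) - Math.min(...peaks);
  return {
    // Tone: higher centroid -> colder color (hue from red at 0 toward blue at 240).
    hue: mapClamped(mean(centroids), 500, 4000, 0, 240),
    // Pitch: lower maxFreq -> more saturated color.
    saturation: mapClamped(avgPeak, 80, 400, 100, 40),
    // Volume row of the table: larger average of maxFreq -> larger size.
    avgSize: mapClamped(avgPeak, 80, 400, 100, 300),
    // Intonation: larger range of maxFreq -> larger range of size.
    sizeRange: mapClamped(peakRange, 0, 300, 10, 150),
  };
}
```

In a p5.js sketch, the frames could plausibly come from calling `analyze()` on a `p5.FFT` each draw cycle, with `binHz` being roughly the Nyquist frequency divided by the number of bins. How the original project wired its audio input and filter is not shown here.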

The resulting animation is projected onto the user’s face with a projector.

👩🏻‍💻 Development Process

6/20 - 21

- Introduction of HACKAIST

- Ideation

6/22 - 23

- Concept decision

- Development with p5.js and projector

- Testing with different people to iterate on details

6/24

- Video filming and editing

- Presentation slides

✍️ What I learned

🏃‍♀️ First experience of a design sprint

I had to go through the whole design process, from brainstorming to implementation, in 5 days. I learned that the most important thing is to choose a project concept whose scale fits the time limit.

✨ Finding values

Unlike usual design projects, this one did not begin with a problem definition. Finding its value and matching it to an application context was a worthwhile process.